… and what can happen if snapshot utilization is left unchecked!

This post is essentially a continuation of Understanding NetApp Snapshots (I was going to call it Part II, but this subject deserves its own title!). Here we'll see what happens when snapshots exceed their snapshot reserve, and what can happen if snapshots are allowed to build up too much.

At the end of the aforementioned post, we had our 1024MB volume with 0MB of file-system space used and 20MB of snapshot reserve used. The volume has a 5% snapshot reserve. For completeness, it is also thin-provisioned (space-guarantee=none) and has a 0% fractional reserve.

| Filesystem | total | used | avail | capacity |
|---|---|---|---|---|
| /vol/cdotshare/ | 972MB | 0MB | 972MB | 0% |
| /vol/cdotshare/.snapshot | 51MB | 20MB | 30MB | 40% |

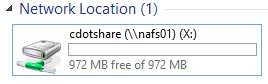

Our client sees an empty volume (share) of size 972MB (95% of 1024MB).

Image: Empty Share

What does the client see if we increase the snapshot reserve to 20%?

::> volume modify cdotshare -percent-snapshot-space 20

Image: Empty share, but less user-visible space due to the increased snapshot reserve

Our client sees that the volume size has been reduced to 819MB (80% of 1024MB).

So, we now have 204MB of snapshot reserve, with 20MB of that reserve used.

::> df -megabyte cdotshare

| Filesystem | total | used | avail | capacity |
|---|---|---|---|---|
| /vol/cdotshare/ | 819MB | 0MB | 819MB | 0% |
| /vol/cdotshare/.snapshot | 204MB | 21MB | 183MB | 10% |
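The split between user-visible space and snapshot reserve is simple arithmetic. Here is a minimal Python sketch, assuming `df -megabyte` truncates to whole megabytes; that matches the figures above, but it is an inference from these numbers rather than documented ONTAP behavior. The function name `split_volume` is hypothetical.

```python
def split_volume(volume_mb: float, snap_reserve_pct: float) -> tuple[int, int]:
    """Return (user-visible MB, snapshot reserve MB) for a volume.

    Values are truncated to whole MB, which is assumed to mirror how
    df -megabyte reports these particular numbers.
    """
    reserve_mb = volume_mb * snap_reserve_pct / 100  # space set aside for snapshots
    user_mb = volume_mb - reserve_mb                  # what the client sees as the share size
    return int(user_mb), int(reserve_mb)


print(split_volume(1024, 5))   # (972, 51)
print(split_volume(1024, 20))  # (819, 204)
```

Raising the reserve from 5% to 20% moves about 153MB from the client's view into the snapshot reserve, even though no data was written.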

What happens if we overfill the snapshot reserve to, say, 200% (408MB) by adding data, creating a snapshot, and then deleting all the data?

What does the client see?

Step 1: Add ~380MB to the share via the client

Step 2: Take a snapshot

::> snapshot create cdotshare -snapshot snap04 -vserver vs1

Step 3: Using the client, delete everything in the share, so the additional 380MB is now also locked in snapshots.

So, our snapshot usage is now at 200% of its reserve (that's twice the reserve), and the df output shows only 613MB of available user capacity.

| Filesystem | total | used | avail | capacity |
|---|---|---|---|---|
| /vol/cdotshare/ | 819MB | 205MB | 613MB | 25% |
| /vol/cdotshare/.snapshot | 204MB | 410MB | 0MB | 200% |

The 613MB of available user capacity is confirmed via the client, even though the volume is actually empty.

Image: Share now occupied, but not by active file-system data

Image: The share says it is empty!
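Why does an empty share lose capacity? In this example, snapshot usage beyond the reserve is charged to the active file system. The Python sketch below is a simplified model of that accounting; it is an assumption that ignores metadata, fractional reserve and thin provisioning, and the function name `active_fs_view` is hypothetical. It reproduces the df figures above to within a megabyte; the small gap in "used" is presumably rounding, since ONTAP tracks blocks rather than whole MB.

```python
def active_fs_view(afs_total_mb: int, afs_data_mb: int,
                   snap_reserve_mb: int, snap_used_mb: int) -> tuple[int, int, int]:
    """Simplified df model: return (afs used MB, afs avail MB, snapshot reserve %)."""
    # Snapshot blocks that no longer fit in the reserve spill into the active file system.
    spill_mb = max(0, snap_used_mb - snap_reserve_mb)
    # df reports the spill as "used" even after the client has deleted every file.
    used_mb = afs_data_mb + spill_mb
    avail_mb = max(0, afs_total_mb - used_mb)
    # Reserve utilization, truncated to a whole percent; it can exceed 100%.
    snap_pct = (100 * snap_used_mb) // snap_reserve_mb
    return used_mb, avail_mb, snap_pct


# 819MB active file system, no live data, 204MB reserve, 410MB held in snapshots
print(active_fs_view(819, 0, 204, 410))  # (206, 613, 200)
```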

If you ever get questions like "my volume's empty but something's using up my space - what is it?", now you know what the answer might be: snapshots going over their reserve!

::> snapshot show cdotshare

| Vserver | Volume | Snapshot | State | Size | Total% | Used% |
|---|---|---|---|---|---|---|
| vs1 | cdotshare | snap01 | valid | 92KB | 0% | 15% |
| vs1 | cdotshare | snap02 | valid | 20.61MB | 2% | 98% |
| vs1 | cdotshare | snap03 | valid | 184.5MB | 18% | 100% |
| vs1 | cdotshare | snap04 | valid | 205MB | 20% | 100% |
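As a sanity check, the Size column adds up to the 410MB that df reports as used in .snapshot, and the Total% column appears to be each snapshot's size relative to the 1024MB volume. That reading of Total% is inferred from these numbers, not taken from ONTAP documentation. A quick Python check (`total_pct` is a hypothetical helper):

```python
VOLUME_MB = 1024

# Sizes from the snapshot show output above (92KB converted to MB).
snapshots_mb = {
    "snap01": 92 / 1024,
    "snap02": 20.61,
    "snap03": 184.5,
    "snap04": 205.0,
}


def total_pct(size_mb: float, volume_mb: float = VOLUME_MB) -> int:
    """Snapshot size as a whole percent of the volume (assumed meaning of Total%)."""
    return int(100 * size_mb / volume_mb)


for name, size in snapshots_mb.items():
    print(f"{name}: {size:.2f}MB, {total_pct(size)}% of volume")

print(f"all snapshots: {sum(snapshots_mb.values()):.1f}MB")  # all snapshots: 410.2MB
```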

To take it to the extreme, I could completely fill up the volume with snapshots (by adding data, taking a snapshot, and deleting the data), and then there's no space for any active file system; that is, the user will see an empty but completely full volume! Or, better put, a volume empty of active user data but otherwise full due to snapshot retention of past changes and deletions.

Image: Empty but completely full volume!

| Filesystem | total | used | avail | capacity |
|---|---|---|---|---|
| /vol/cdotshare/ | 819MB | 818MB | 0MB | 100% |
| /vol/cdotshare/.snapshot | 204MB | 1022MB | 0MB | 499% |

What are (some) ways to manage snapshots?

1) The volume should be sized correctly for the amount of data that's going to go in it (keeping in mind the need for growth), and the snapshot reserve should be large enough to contain the changes (deletions and block modifications) over the required retention period.

2) The snapshot policy should be set appropriately:

::> volume modify -vserver VSERVER -volume VOLUME -snapshot-policy POLICY

Note: Changing the snapshot policy will require manual deletion of snapshots that were controlled by the previous policy.

3) Consider making use of these space and snapshot management features:

::> volume modify -vserver VSERVER -volume VOLUME -?

-autosize {true|false}

-max-autosize {integer(KB/MB/GB/TB/PB)}

-autosize-increment {integer(KB/MB/GB/TB/PB)}

-space-mgmt-try-first {volume_grow/snap_delete}
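For point 1, a common back-of-the-envelope approach is to estimate how much data changes per day and multiply by the number of days of snapshots you keep. Below is a hypothetical Python helper; the function name, the 20% headroom default and the example numbers are all assumptions, and it pessimistically treats every day's changes as distinct blocks.

```python
import math


def snap_reserve_pct(volume_mb: float, daily_change_mb: float,
                     retention_days: int, headroom: float = 1.2) -> int:
    """Rough snapshot reserve as a whole percent of the volume, rounded up.

    Assumes each day's changed/deleted blocks are unique, so snapshot
    growth is roughly daily change x retention days, plus some headroom.
    """
    needed_mb = daily_change_mb * retention_days * headroom
    return math.ceil(100 * needed_mb / volume_mb)


# Hypothetical: 1024MB volume, ~20MB of changes per day, 7 days of snapshots
print(snap_reserve_pct(1024, 20, 7))  # 17
```

The result would then be applied with `-percent-snapshot-space`, as in the volume modify example earlier. Real change rates overlap (the same block rewritten several times is held once per snapshot that references it), so treat this as an upper-bound starting point to refine from observed snapshot sizes.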

Monitoring, Events and Alerting

OnCommand Unified Manager (OCUM) is available and free to use, and it can monitor your snapshot reserve utilization levels and much more!
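OCUM does this for you, but the underlying check is simple. Here is a hypothetical Python sketch of a reserve-utilization alert, fed with the df numbers from this post. The function name and the 90%/100% thresholds are made-up examples, not OCUM defaults.

```python
def snapshot_reserve_status(reserve_mb: float, snap_used_mb: float,
                            warn_pct: float = 90, crit_pct: float = 100) -> str:
    """Classify snapshot reserve utilization; thresholds are illustrative."""
    used_pct = 100 * snap_used_mb / reserve_mb
    if used_pct >= crit_pct:
        return "critical"  # reserve is full; any further growth spills into active file-system space
    if used_pct >= warn_pct:
        return "warning"
    return "ok"


print(snapshot_reserve_status(51, 20))    # ok (about 39%)
print(snapshot_reserve_status(204, 410))  # critical (about 200%)
```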

Finally - Fractional Reserve

Going back to our example, what would it look like if we set the fractional reserve to 100%?

The answer is no different:

::> volume modify -vserver vs1 -volume cdotshare -fractional-reserve 100%

::> df -megabyte cdotshare

| Filesystem | total | used | avail | capacity |
|---|---|---|---|---|
| /vol/cdotshare/ | 819MB | 818MB | 0MB | 100% |
| /vol/cdotshare/.snapshot | 204MB | 1022MB | 0MB | 499% |

The volume is still full of snapshots, with no space for active file-system data!

If you want to learn more about what fractional reserve is (I'm not going to make a mess of explaining it here), this looks like a good place to start:
