Below are some

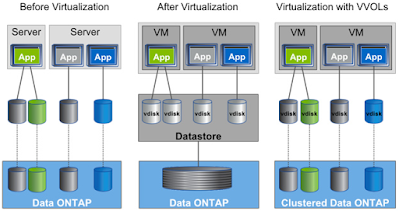

research links and notes I jotted down regards the topic of, VVOLs,

Oracle Database, Performance, and ONTAP. I should strongly point out

that I am not an Oracle expert, I am also not particularly

expert on many other things too! This is just my way of getting some

thoughts and ideas together.

1) [VVOLs & Oracle] A Cracking VMware Whitepaper:

Virtualizing Oracle Workloads with VMware vSphere Virtual Volumes on VMware Hybrid Cloud | REFERENCE ARCHITECTURE

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/whitepaper/solutions/oracle/vmware-oracle-on-virtual-volumes.pdf

From a performance perspective I didn't get much from this whitepaper, but it is great that it exists, since it validates an Oracle on VVOLs approach.

2) [Oracle & Performance & ONTAP] Words of Steiner:

Jeffrey Steiner is the Oracle on ONTAP king. I couldn't find anything relating to VVOLs on his blog, but there is this:

NetApp NVMe for your database

https://words.ofsteiner.com/2018/08/24/netapp-nvme-for-your-database/

Toward the bottom of the post he presents some statistics from an actual AWR report, and you can see what NVMe-oF can do.

Alas, VVOLs are not currently supported on NVMe-oF, as per:

Requirements and Limitations of VMware NVMe Storage

3) [Oracle & Performance] Oracle AWR:

I know little about Oracle AWR, but this PDF slide-deck looks very interesting:

Oracle: Using Automatic Workload Repository for Database Tuning: Tips for Expert DBAs

https://www.oracle.com/technetwork/database/manageability/diag-pack-ow09-133950.pdf

4) [VVOLs & Performance & ONTAP] NetApp Verified Architecture:

NetApp Verified Architectures are always worth a look. No Oracle in this NVA, but a little about VVOLs, a little about performance, and of course a big bit about ONTAP.

Modern SAN Cloud-Connected Flash Solution: NetApp, VMware, and Broadcom Verified Architecture Design Edition: With MS Windows Server 2019 and MS SQL Server 2017 Workloads

https://www.netapp.com/pdf.html?item=/media/9222-nva-1145-design.pdf

The '4.5 Workload Design' section talks about:

“We used the 3SB tool to create an 800GB SQL Server database. We spread the database across five 200GB VMDK files, and one additional 200GB VMDK to handle the database log activity.

For the FCP environment, we deployed six 250GB LUNs on each of the 14 SQL Server hosts. We created one LUN per volume and one VMDK per LUN.

For the NVMe/FC environment, we created three 1.46TB namespaces per SQL Server host, but used two VMDKs per namespace.”
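To get a feel for the scale of that layout, here is a quick back-of-the-envelope sketch in Python. The figures come straight from the extract above; the function and variable names are my own, and I am assuming the quoted sizes can simply be multiplied up (no allowance for overheads or reserves).

```python
# Figures quoted from section 4.5 of the NVA; names are hypothetical.
SQL_HOSTS = 14


def fcp_layout(hosts=SQL_HOSTS, luns_per_host=6, lun_gb=250):
    """Totals for the FCP environment: one LUN per volume, one VMDK per LUN."""
    luns = hosts * luns_per_host
    return {
        "luns": luns,
        "flexvols": luns,  # one LUN per volume
        "vmdks": luns,     # one VMDK per LUN
        "capacity_gb": luns * lun_gb,
    }


def nvme_layout_per_host(namespaces=3, namespace_tb=1.46, vmdks_per_namespace=2):
    """Per-host figures for the NVMe/FC environment."""
    return {
        "namespaces": namespaces,
        "vmdks": namespaces * vmdks_per_namespace,
        "capacity_tb": round(namespaces * namespace_tb, 2),
    }


if __name__ == "__main__":
    print("FCP, all hosts:", fcp_layout())
    print("NVMe/FC, per host:", nvme_layout_per_host())
```

The point of interest for me is that in the FCP design every SQL Server host has its six VMDKs spread over six separate FlexVol volumes.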

The '5.1 Test Methodology' section talks about:

“As ONTAP systems are designed for multiple workloads and tenants, best performance is obtained when at least four FlexVol volumes are used per node.”

5) [Performance & ONTAP] Justin Parisi:

There are a few important things to understand about ONTAP and performance. These are covered well by Justin Parisi in these links:

Volume Affinities: How ONTAP and CPU Utilization Has Evolved

https://blog.netapp.com/volume-affinities-how-ontap-and-cpu-utilization-has-evolved/

NetApp ONTAP FlexGroup Volumes: Top Best Practices: TR-4571-a

https://www.netapp.com/pdf.html?item=/media/17251-tr4571a.pdf

NetApp ONTAP FlexGroup Volumes: Best Practices and Implementation Guide: TR-4571

https://www.netapp.com/pdf.html?item=/media/12385-tr4571pdf.pdf

A couple of extracts:

“In ONTAP 9.4 and later (high-end platforms):

- Each volume has one affinity.
- Each aggregate has eight affinities
- Nodes have a maximum of 16 affinities.

One member volume per available affinity is created. In ONTAP 9.4 and later, up to 16 member volumes per node are created in a FlexGroup volume.”

“To support concurrent processing, ONTAP assesses its available hardware at startup and divides its aggregates and volumes into separate classes called affinities. In general terms, volumes that belong to one affinity can be serviced in parallel with volumes that are in other affinities. In contrast, two volumes that are in the same affinity often must take turns waiting for scheduling time (serial processing) on the node’s CPU.”
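To make the serial-versus-parallel point concrete for myself, here is a toy Python model of the second extract. It is only a sketch under big assumptions: work is measured in made-up units, volumes in the same affinity are serviced strictly one after another, different affinities run fully in parallel, and the round-robin placement of volumes onto affinities is my own invention (ONTAP decides this internally). None of the names below are ONTAP APIs.

```python
from collections import defaultdict


def elapsed_time(work_by_volume, affinity_of):
    """Toy model: serial within an affinity, parallel across affinities.

    work_by_volume: {volume name: units of work}
    affinity_of:    {volume name: affinity id} (hypothetical placement)
    """
    per_affinity = defaultdict(float)
    for volume, work in work_by_volume.items():
        per_affinity[affinity_of[volume]] += work
    # The busiest affinity determines when all the work is finished.
    return max(per_affinity.values(), default=0.0)


# Case 1: six virtual disks (10 units of work each) all in one FlexVol volume,
# which means one affinity.
single_volume = elapsed_time({"vol0": 60.0}, {"vol0": 0})

# Case 2: the same six disks, one per FlexVol volume, with the volumes placed
# round-robin over four affinities (assumed placement).
work = {f"vol{i}": 10.0 for i in range(6)}
placement = {f"vol{i}": i % 4 for i in range(6)}
spread_volumes = elapsed_time(work, placement)

if __name__ == "__main__":
    print("one volume:  ", single_volume)
    print("six volumes: ", spread_volumes)
```

In this toy model the single-volume case takes 60 units and the spread case takes 20, because the busiest affinity only has two volumes queued on it. Real systems will not scale this neatly, but it shows why the number of volumes in play matters.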

6) [VVOLs & ONTAP] Rebalancing VVOLs Datastores:

This link has good info:

Rebalancing vVols datastores

https://docs.netapp.com/vapp-98/index.jsp?topic=%2Fcom.netapp.doc.vsc-iag%2FGUID-C9FEA419-BDDF-4B9B-837C-0D64CC136FA3.html

“All vVols associated with a virtual machine are moved to the same FlexVol volumes”

7) [VVOLs & ONTAP] FlexPod Design Guides:

FlexPod design guides are always useful because of the large amount of technical detail. This one was not particularly useful for my research, but there is a bit about VVOLs on ONTAP in here:

FlexPod Datacenter with VMware vSphere 7.0 and NetApp ONTAP 9.7

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/fp_vmware_vsphere_7_0_ontap_9_7.html

SUMMARY / CONCLUSION

From this little bit of research, something becomes apparent. If you're after the most extreme Oracle performance you can get, then VVOLs are not going to be the best option:

1) VVOLs are not currently supported by VMware on NVMe-oF, so you can't benefit from NVMe-oF.

2) All VVOLs associated with a virtual machine are in the same FlexVol, so that virtual machine can't benefit from using multiple volume affinities.

So you need to ask yourself: is the performance you get from your Oracle on VVOLs testing sufficient for your needs?

If it is, then excellent. If not, then don't use VVOLs, and instead benefit from NVMe-oF and from spreading your workload over multiple volume and aggregate affinities.

PS: "Vdbench is a command line utility specifically created to help engineers and customers generate disk I/O workloads to be used for validating storage performance and storage data integrity. Vdbench execution parameters may also specified via an input text file.":

https://www.oracle.com/downloads/server-storage/vdbench-downloads.html

PPS: Something also to bear in mind from this NetApp Documentation:

Unified Manager 9.7 Documentation Center (netapp.com)

"When there is minimal user activity in the resource, the available IOPS value is calculated assuming a generic workload based on approximately 4,500 IOPS per CPU core."
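As a rough illustration of that figure, here is a tiny Python sketch that just multiplies it up. The 4,500 IOPS per core comes from the quote; the core counts are hypothetical examples of mine, and I am assuming a plain multiplication, which may well not be exactly how Unified Manager calculates its value.

```python
GENERIC_IOPS_PER_CORE = 4500  # approximate figure quoted from the Unified Manager docs


def generic_available_iops(cpu_cores, iops_per_core=GENERIC_IOPS_PER_CORE):
    """Naive estimate: cores multiplied by the generic per-core figure."""
    if cpu_cores < 0:
        raise ValueError("cpu_cores must not be negative")
    return cpu_cores * iops_per_core


if __name__ == "__main__":
    # Hypothetical core counts, not taken from any specific controller model.
    for cores in (8, 16, 32):
        print(f"{cores} cores -> ~{generic_available_iops(cores):,} IOPS")
```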
